Time Series: Modeling, Computation, and Inference, Second Edition (Chapman & Hall/CRC Texts in Statistical Science) by Raquel Prado, Marco A. R. Ferreira, and Mike West | ISBN 9781498747028 | Amazon.com

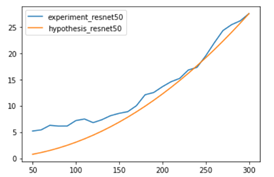

The Correct Way to Measure Inference Time of Deep Neural Networks | by Amnon Geifman | Towards Data Science

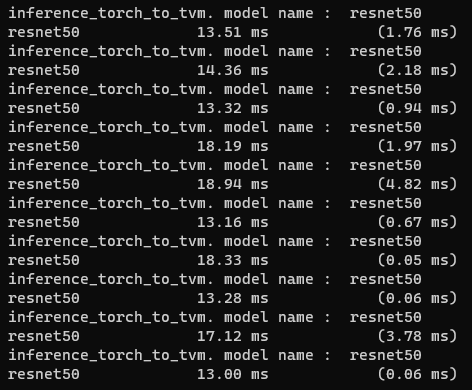

Difference in inference time between resnet50 from github and torchvision code - vision - PyTorch Forums

Speed up InceptionV3 inference time up to 18x using Intel Core processor | by Fernando Rodrigues Junior | Medium

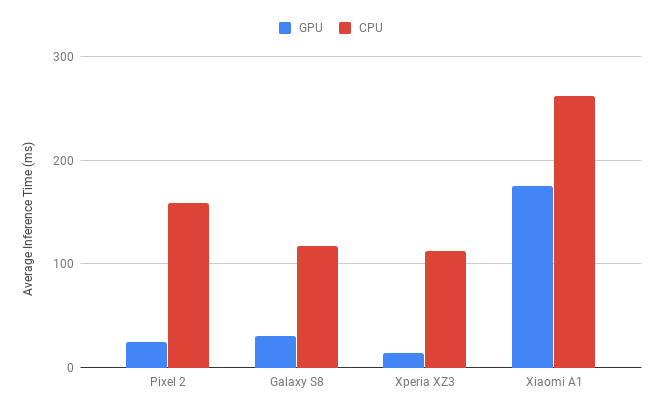

![26ms Inference Time for ResNet-50: Towards Real-Time Execution of all DNNs on Smartphone | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/2824181f87c6d047b81801faf1652310b98b1cad/3-Figure2-1.png)

[PDF] 26ms Inference Time for ResNet-50: Towards Real-Time Execution of all DNNs on Smartphone | Semantic Scholar

System technology/Development of quantization algorithm for accelerating deep learning inference | KIOXIA

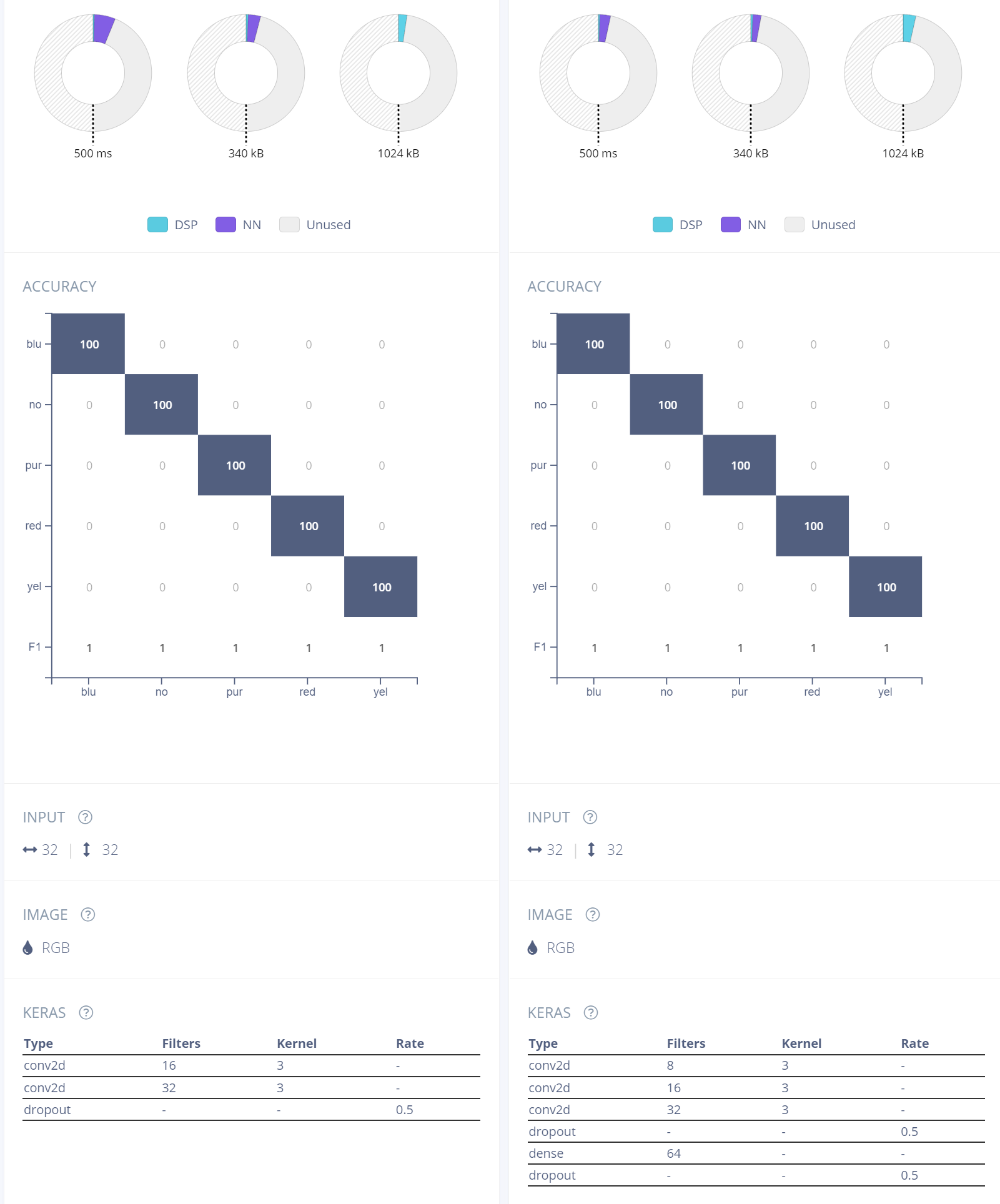

Figure 9 from Intelligence Beyond the Edge: Inference on Intermittent Embedded Systems | Semantic Scholar

![PP-YOLO Object Detection Algorithm: Why It's Faster than YOLOv4 [2021 UPDATED] - Appsilon | Enterprise R Shiny Dashboards](https://appsilon.com/wp-content/uploads/2020/09/pp-yolo-frameratevsmethod.png)

PP-YOLO Object Detection Algorithm: Why It's Faster than YOLOv4 [2021 UPDATED] - Appsilon | Enterprise R Shiny Dashboards

Efficient Inference in Deep Learning — Where is the Problem? | by Amnon Geifman | Towards Data Science

How Acxiom reduced their model inference time from days to hours with Spark on Amazon EMR | AWS for Industries